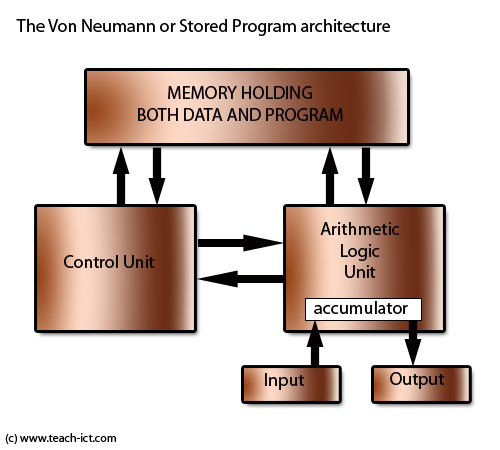

Von Neumann-

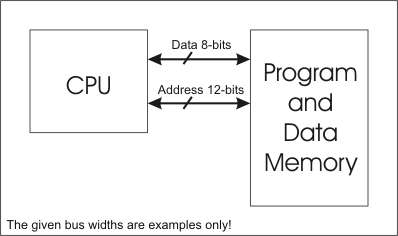

- The computer consists of a CPU, memory and I/O devices. The program is stored in memory, and the CPU fetches one instruction at a time from memory and executes it.

- Instructions are executed sequentially, as controlled by a program counter; this makes the process very slow.

- To increase speed, parallel processing computers have been developed, in which several serial CPUs are connected in parallel to solve a problem.

- Uses a processing unit and a single storage structure, separate from the processor, to hold both instructions and data.

- Instructions and data share the same memory space.

- Instructions and data are stored in the same format

- A single control unit follows a linear fetch-decode-execute cycle.

- One instruction at a time.

- Registers provide fast access to instructions and data.
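To make the stored-program idea concrete, here is a minimal sketch of a toy von Neumann machine. The instruction set (LOAD, ADD, STORE, HALT), the single accumulator register and the `(opcode, operand)` encoding are hypothetical, chosen only for illustration. Instructions and data sit in one shared `memory` list, and a program counter drives a strictly sequential fetch-decode-execute loop.

```python
# Toy von Neumann machine. The instruction set (LOAD/ADD/STORE/HALT), the single
# accumulator and the (opcode, operand) encoding are hypothetical, for illustration.

def run(memory):
    """Run the program held in `memory`, which stores instructions AND data."""
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator: a register giving fast access to the current value
    while True:
        opcode, operand = memory[pc]  # FETCH the instruction from shared memory
        pc += 1                       # point at the next instruction (sequential)
        if opcode == "LOAD":          # DECODE, then EXECUTE
            acc = memory[operand]     # data is read from the same memory
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")


# Addresses 0-3 hold instructions; addresses 4-6 hold data.
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", None),
    2, 3, 0,
]
print(run(program)[6])  # 5
```

Because one list holds both, nothing in this sketch stops a STORE from overwriting an instruction; keeping instructions in a separate memory, as the Harvard design does, makes that much harder.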

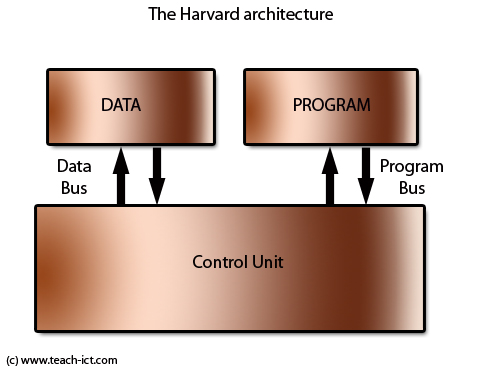

Harvard-

- Splits memory into two parts - one for data and the other for instructions.

- The problem with the Harvard architecture is complexity and cost.

- Instead of one bus there are now two: one for instructions and one for data.

- Having two buses means more pins on the CPU, a more complex motherboard, doubled-up RAM chips and a more complex cache design. This is why it is rarely used outside the CPU.

- It is sometimes used within the CPU to handle its caches (for example, separate instruction and data caches).

- Instructions and data are stored in separate memory units.

- Instructions and data each have their own bus.

- Reading and writing data can be done at the same time as fetching an instruction

- Often used by RISC processors.
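A rough way to compare the two designs is to count how many memory accesses each bus has to carry. The model below is a simplification assumed for illustration: every instruction needs one fetch, LOAD and STORE each need one extra data access, every access takes one cycle, and caches and pipelining are ignored. The result is a lower bound on bus cycles, not a prediction for a real CPU.

```python
# Simplified bus model (assumptions: 1 cycle per memory access, no caches,
# only LOAD and STORE touch data memory). Gives a lower bound on bus cycles.

def bus_cycles_lower_bound(program, harvard):
    fetches = len(program)                                       # one fetch per instruction
    data = sum(1 for op in program if op in ("LOAD", "STORE"))   # data accesses
    if harvard:
        # Two buses working at the same time: the busier one sets the pace.
        return max(fetches, data)
    # One shared bus (von Neumann): fetches and data accesses must take turns.
    return fetches + data


program = ["LOAD", "LOAD", "ADD", "STORE"]
print(bus_cycles_lower_bound(program, harvard=False))  # 7
print(bus_cycles_lower_bound(program, harvard=True))   # 4
```

Under these assumptions the shared bus is the bottleneck, which is the motivation for a second bus despite its extra cost.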

CISC Vs RISC:

RISC- Reduced instruction set computers

CISC - Complex instruction set computers

There are two fundamental types of CPU architecture: CISC and RISC.

CISC:

CISC is the most prevalent and established microprocessor architecture

- A large number of complicated instructions

- Can take many clock cycles for a single instruction

- Tend to be slower than RISC

- Wider variety of instructions and addressing modes

- Less reliant on registers

- Pipelining is harder to implement in CISC, because instructions vary in length and in the number of clock cycles they take

RISC:

RISC is a relatively new architecture.

- RISC architectures only support a small number of instructions

- All instructions are simple

- Each of these instructions can typically be completed in one clock cycle

- More instructions are needed to complete a given task but each instruction is executed extremely quickly

- More efficient at processing, as there are no unused instructions

- RISC needs a greater number of registers to provide quick access to data while programs are being executed.

- RISC can support pipelining
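The benefit of pipelining can be put into numbers. Assuming an idealised k-stage pipeline in which every stage takes one clock cycle and nothing ever stalls (real pipelines do stall on branches and data dependencies), n instructions need n × k cycles without pipelining but only k + (n − 1) cycles with it, because once the pipeline is full one instruction completes every cycle. Uniform, one-cycle RISC instructions are what make this overlap straightforward.

```python
def cycles_unpipelined(n, k):
    # Each instruction passes through all k stages before the next one starts.
    return n * k


def cycles_pipelined(n, k):
    # k cycles for the first instruction to fill the pipeline,
    # then one more instruction completes every cycle (ideal: no stalls).
    return k + (n - 1) if n > 0 else 0


# e.g. a 3-stage fetch/decode/execute pipeline running 10 instructions
print(cycles_unpipelined(10, 3))  # 30
print(cycles_pipelined(10, 3))    # 12
```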

| CISC | RISC |
|---|---|
| Large instruction set | Compact instruction set |
| Complex, powerful instructions | Simple hard-wired machine code and control unit |
| Instruction sub-commands micro-coded in on-board ROM | Pipelining of instructions |
| Compact and versatile register set | Numerous registers |
| Numerous memory addressing options for operands | Compiler and IC developed simultaneously |

COMPARISON:

| CISC | RISC |
|---|---|
| Has more complex hardware | Has simpler hardware |
| More compact software code | Longer, more complicated software code |
| Takes more cycles per instruction | Typically takes one cycle per instruction |
| Can use more RAM to store intermediate results | Needs fewer RAM accesses, as more registers are available |
| Compiler can use a single machine instruction | Compiler has to use a number of instructions |
| Uses less RAM to store a program | Uses more RAM to store a program, as it needs more instructions |
| Legacy: there are about 40 years’ worth of software that still needs to run on CISC CPUs | There is much less need to run previously written software |
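The compiler rows above can be illustrated with the familiar "multiply two values in memory" example. The mnemonics (MULT, LOAD, MUL, STORE), the register names R1 and R2 and the tiny interpreters are hypothetical, written only to show the contrast: CISC does the job in one memory-to-memory instruction, while RISC needs four simpler ones, only two of which touch memory.

```python
# The same task, a = a * b with both values in memory, in two hypothetical
# instruction sets. Mnemonics and register names (R1, R2) are illustrative only.

cisc_program = [
    ("MULT", "a", "b"),      # one complex instruction: load, multiply and store
]

risc_program = [
    ("LOAD", "R1", "a"),     # copy a from memory into register R1
    ("LOAD", "R2", "b"),     # copy b from memory into register R2
    ("MUL", "R1", "R2"),     # multiply registers: R1 = R1 * R2
    ("STORE", "a", "R1"),    # write the result back to memory
]


def run_cisc(program, memory):
    for op, dst, src in program:
        if op == "MULT":
            # Memory-to-memory: the hardware (microcode) does all the steps.
            memory[dst] = memory[dst] * memory[src]
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return memory


def run_risc(program, memory):
    regs = {}
    for op, x, y in program:
        if op == "LOAD":
            regs[x] = memory[y]
        elif op == "MUL":
            regs[x] = regs[x] * regs[y]
        elif op == "STORE":
            memory[x] = regs[y]
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return memory


print(len(cisc_program), len(risc_program))           # 1 4
print(run_cisc(cisc_program, {"a": 6, "b": 7})["a"])  # 42
print(run_risc(risc_program, {"a": 6, "b": 7})["a"])  # 42
```

Both programs produce the same result; the difference is where the work lives: in CISC hardware and microcode, or in the compiler's longer instruction sequence.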

CPU time (execution time) = (number of instructions) × (average clock cycles per instruction) × (seconds per clock cycle)

RISC attempts to reduce execution time by reducing average clock cycles per instruction.

CISC attempts to reduce execution time by reducing the number of instructions per program, at the cost of more clock cycles per instruction.
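Plugging numbers into the formula shows the trade-off. The figures below are invented for illustration and are not measurements of any real processor; with different assumed figures the comparison could just as easily go the other way.

```python
def cpu_time(instructions, avg_cycles_per_instruction, clock_hz):
    """CPU time = instructions x average cycles per instruction x seconds per cycle."""
    seconds_per_cycle = 1 / clock_hz
    return instructions * avg_cycles_per_instruction * seconds_per_cycle


# Invented figures for one task on a 1 GHz clock (not real measurements):
# CISC: fewer instructions, more cycles each; RISC: more instructions, fewer cycles each.
cisc = cpu_time(1_000_000, 4.0, 1e9)
risc = cpu_time(2_000_000, 1.5, 1e9)
print(f"CISC: {cisc * 1000:.1f} ms")  # CISC: 4.0 ms
print(f"RISC: {risc * 1000:.1f} ms")  # RISC: 3.0 ms
```

Here RISC runs twice as many instructions but still finishes sooner, because its lower average cycles per instruction more than compensates.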

| RISC | CISC |
|---|---|
| Simple instructions | Complex instructions (often made up of many simpler instructions) |
| Fewer instructions | A large range of instructions |
| Fewer addressing modes | Many addressing modes |
| Only LOAD and STORE instructions can access memory | Many instructions can access memory |
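The last row describes what is usually called a load/store architecture. The checker below is a hypothetical sketch: it assumes memory operands are written as `"[name]"` and registers as `"R1"`, `"R2"` and so on, a notation invented for this example, and reports whether only LOAD and STORE instructions touch memory.

```python
MEMORY_OPS = {"LOAD", "STORE"}


def is_memory_operand(operand):
    # Assumed notation for this sketch: memory operands are written "[name]".
    return operand.startswith("[") and operand.endswith("]")


def follows_load_store_rule(program):
    """True if only LOAD/STORE instructions have memory operands."""
    for opcode, *operands in program:
        touches_memory = any(is_memory_operand(o) for o in operands)
        if touches_memory and opcode not in MEMORY_OPS:
            return False
    return True


risc_style = [("LOAD", "R1", "[a]"), ("LOAD", "R2", "[b]"),
              ("MUL", "R1", "R2"), ("STORE", "[a]", "R1")]
cisc_style = [("MULT", "[a]", "[b]")]
print(follows_load_store_rule(risc_style))  # True
print(follows_load_store_rule(cisc_style))  # False
```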

Multicore Systems:

- The classic von Neumann architecture uses only a single processor to execute instructions. In order to improve the computing power of processors, it was necessary to increase the physical complexity of the CPU. Traditionally this was done by finding new, ingenious ways of fitting more and more transistors onto the same size chip.

- Moore’s Law predicted that the number of transistors that could be placed on a chip would double every two years.

- However, as computer scientists reached the physical limit of the number of transistors that could be placed on a silicon chip, it became necessary to find other means of increasing the processing power of computers. One of the most effective means of doing this came about through the creation of multicore systems (computers with multiple processors)

- Multicore processors are now very common: the processor has several cores, allowing multiple programs or threads to be run at once.
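Moore's Law as stated above is an exponential: the count doubles once per two-year period. A minimal sketch of that idealised model, with a made-up starting count of one million transistors:

```python
def projected_transistors(initial_count, years, doubling_period_years=2):
    """Idealised Moore's Law: the count doubles every `doubling_period_years`."""
    return initial_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point of 1 million transistors, projected 10 years ahead:
# 10 years / 2 = 5 doublings, so 1,000,000 x 2**5.
print(projected_transistors(1_000_000, 10))  # 32000000.0
```

Real chips only approximately followed this curve, and physical limits are what eventually pushed designers towards adding cores instead.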

Advantages and Disadvantages of Multicore Processors:

| Advantages | Disadvantages |
|---|---|
| More jobs can be done in a shorter time because they are executed simultaneously. | It is difficult to write programs for multicore systems, ensuring that each task has the correct input data to work on. |
| Tasks can be shared to reduce the load on individual processors and avoid bottlenecks. | Results from different processors need to be combined at the end of processing, which can be complex and adds to the time taken to execute a program. |
| | Not all tasks can be split across multiple processors. |

Parallel systems:

- One of the most common types of multicore system

- In parallel processing, two or more processors work together to perform a single task.

- They tend to be referred to as dual-core (two processors) or quad-core (four processors) computers.

- The task is split into smaller sub-tasks (threads).

- These tasks are executed simultaneously by all available processors (any task can be processed by any of the processors).

- This can greatly decrease the time taken to execute a program, but software has to be specially written to take advantage of multicore systems.
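The split, execute and combine steps above can be sketched with Python's standard `concurrent.futures` module. Summing a list is just a stand-in task chosen for illustration. In standard CPython builds, threads take turns running Python code (the global interpreter lock), so this shows the structure rather than a real speed-up; `ProcessPoolExecutor` from the same module is the usual choice for spreading CPU-bound work across cores.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Divide the data into at most `parts` roughly equal sub-tasks."""
    size = -(-len(data) // parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_sum(data, workers=4):
    if not data:
        return 0
    chunks = split(data, workers)                   # 1. split the task
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, chunks))  # 2. run sub-tasks concurrently
    return sum(partial_sums)                        # 3. combine the results


print(parallel_sum(list(range(1, 101))))  # 5050
```

The final `sum(partial_sums)` is the "combine" step that the disadvantages table warns about: it only works because addition can be split and recombined, which is not true of every task.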

Parallel computing systems are generally placed in one of three categories:

- Multiple instruction, single data (MISD) systems have multiple processors, with each processor using a different set of instructions on the same set of data.

- Single instruction, multiple data (SIMD) computers have multiple processors that follow the same set of instructions, with each processor taking a different set of data. Essentially SIMD computers process lots of different data concurrently, using the same algorithm.

- Multiple instruction, multiple data (MIMD) computers have multiple processors, each of which is able to process instructions independently of the others. This means that a MIMD computer can truly process a number of different instructions simultaneously.

MIMD is probably the most common parallel computing architecture.
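These three categories come from Flynn's taxonomy. The sketch below models them conceptually with ordinary functions; the helper names `simd`, `misd` and `mimd` and the sample operations are hypothetical. Python evaluates each list one element at a time, whereas real parallel hardware would perform the operations simultaneously.

```python
# Conceptual models of the three categories. Python evaluates these one step at
# a time; real parallel hardware would perform the operations simultaneously.

def simd(instruction, data):
    """Single instruction, multiple data: one operation applied to every item."""
    return [instruction(x) for x in data]


def misd(instructions, item):
    """Multiple instruction, single data: different operations on the same item."""
    return [f(item) for f in instructions]


def mimd(tasks):
    """Multiple instruction, multiple data: each processor has its own (operation, data)."""
    return [f(x) for f, x in tasks]


def double(x):
    return 2 * x


def square(x):
    return x * x


def negate(x):
    return -x


print(simd(double, [1, 2, 3]))                        # [2, 4, 6]
print(misd([double, square, negate], 3))              # [6, 9, -3]
print(mimd([(double, 1), (square, 2), (negate, 3)]))  # [2, 4, -3]
```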

- All the processors in a parallel processing system act in the same way as standard single-core (von Neumann) CPUs, loading instructions and data from memory and acting accordingly.

- However, the different processors in a multicore system need to communicate continuously with each other in order to ensure that if one processor changes a key piece of data (for example, the players’ scores in a game), the other processors are aware of the change and can incorporate it into their calculations.

- There is a huge amount of additional complexity involved in implementing parallel processing, because when each separate core (processor) has completed its own task, the results from all the cores need to be combined to form the complete solution to the original problem.

- This complexity may slow processing down: in some cases, the additional time taken to co-ordinate communication between processors and combine their results into a single solution is greater than the time saved by sharing the workload.
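The point that co-ordination can cost more than it saves can be sketched with Amdahl's law (a standard model, not part of the original notes) plus an extra co-ordination cost for each additional processor. The linear overhead term and all the numbers are assumptions chosen for illustration.

```python
def parallel_time(serial_time, parallel_fraction, processors, overhead_per_extra_processor):
    """Estimated run time on `processors` cores under a simple, assumed cost model."""
    # Part of the job cannot be split across processors (Amdahl's law) ...
    unsplittable = serial_time * (1 - parallel_fraction)
    # ... the rest is shared equally between the processors ...
    shared = serial_time * parallel_fraction / processors
    # ... and each extra processor adds co-ordination and combining cost (assumed linear).
    coordination = overhead_per_extra_processor * (processors - 1)
    return unsplittable + shared + coordination


# Illustrative numbers only: a 100 ms job, 90% of which can be split,
# with 2 ms of co-ordination cost per extra core.
for cores in (1, 2, 4, 8, 16):
    print(cores, f"{parallel_time(100, 0.9, cores, 2):.2f} ms")
```

With these assumed figures the time falls from 100 ms on one core to about 35 ms on eight, then rises to about 46 ms on sixteen, because the extra co-ordination outweighs the smaller share of work each core receives.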
